2009-12-03 – ekey happiness

In my last post about the ekey, I complained about two things: a memory leak in the server and the lack of reconnects if the client was disconnected for any reason. I’ve been meaning to blog about the follow-up for a while, but haven’t had the time until now.

Quite quickly after my blog post, Simtec engineers got in touch on IRC and we worked together to find out what the memory leak problem was. They also put in the reconnect support I asked for. All this in less than a week, for a device which only cost £36.

To make things even better, they picked up some other small bug fixes/requests from me, such as making ekeyd-egd-linux just Suggest ekeyd, and the latest release (1.1.1) seems to have fixed some more problems.

All in all, I’m very happy about it. On top of that, Ian Molton (of Collabora) has been busy fixing up virtio_rng in the kernel and adding EGD support (including reconnection support) to qemu and thereby KVM. Hopefully all this hits the next stable releases and I can retire my egd-over-stunnel hack.

2009-11-05 – Package workflow

As 3.0 format packages are now allowed into the archive, I am thinking about what I would like my workflow to look like, and hoping one of the new formats fits it.

For new upstream releases, I am imagining something like:

- New upstream version is released.
- git fetch + merge into upstream branch.
- Import tarballs, preferably in their original format (bz2/gzip), using pristine-tar.
- Merge upstream to debian branch. Do necessary fixups and adjustments. At this point, the upstream..debian branch delta is what I want to apply to the upstream release. I need to apply this delta to the release itself so that all generated files end up in the package that’s built and uploaded.

The source package has two functions at this point: Be a starting point for further hacking; and be the source that buildds use to build the binary Debian packages.

For the former, I need the git repository itself. It is increasingly my preferred form of modification and so I consider it part of the source.

For the latter, it might be easiest just to ship the orig.tar.{gz,bz2} and the upstream..debian delta. This does require the upstream..debian delta not to change any generated files, which I think is a fair requirement.

I’m not actually sure which source format can give me this. I think maybe the 3.0 (git) format can, but I haven’t played around with it enough to see. I also don’t know if any tools actually support this workflow.

2009-11-02 – Distributing entropy

Back at the Debian barbecue party at the end of August, I got myself an EntropyKey from the kind folks at Simtec. It has been working so well that I haven’t really had a big need to blog about it. Plug it in and watch /proc/sys/kernel/random/entropy_avail never run dry.

However, Collabora, where I am a sysadmin, also got one. We are using a few virtual machines rather than physical machines, as we want the separate security domains but don’t have any extreme performance needs. Like most VMs, they have been starved of entropy. That raises a problem: how do we get the entropy from the host system where the key is plugged in to the virtual machines?

Kindly enough, the ekeyd package also includes ekeyd-egd-linux, which speaks EGD, the TCP protocol the Entropy Gathering Daemon defined a long time ago. ekeyd itself can also output in the same protocol, so this should be easy enough, or so you would think.

Our VMs are all bridged together on the same network that is also exposed to the internet, and the EGD protocol doesn’t support any kind of encryption, so, to be safe rather than sorry, I decided to encrypt the entropy. Some people think I’m mad for encrypting what is essentially random bits, but that’s me for you.

So, I ended up setting up stunnel, telling ekeyd on the host to listen on localhost on a given port, and stunnel to forward connections to that port. On each VM, I set up stunnel to forward connections from a given port on localhost to the port on the physical machine where stunnel is listening. ekeyd-egd-linux is then told to connect to the port on localhost where stunnel is listening. After a bit of certificate fiddling and such, I can do:

# pv -rb < /dev/random > /dev/null

17.5kB [4.39kB/s]

which is way, way better than what you will get without a hardware RNG. The hardware itself seems to be delivering about 32kbit/s of entropy.
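As a rough sanity check, converting the hardware rate into the units pv reports:

```latex
\frac{32\ \text{kbit/s}}{8\ \text{bit/byte}} = 4\ \text{kB/s}
```

This is in the same ballpark as the 4.39kB/s measured above. The exact comparison depends on whether pv counts a kB as 1000 or 1024 bytes and on how the "about 32kbit/s" figure is rounded.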

My only gripes at this point are that the EGD implementation could use a little more work. The EGD server seems to leak memory, and it would be very useful if the client reconnected when it was disconnected for any reason. Even with those missing bits, I’m happy about the key so far.

2009-07-02 – Airport WLAN woes

Dear whoever runs the Telefonica APs in both the Rio de Janeiro and Sao Paulo airports: your DNS servers are returning SERVFAIL and have been doing so for quite a while. This is not helpful; perhaps you should set up some monitoring of them?

2009-06-19 – Rendering GPX files using libchamplain and librest

A little while ago, I read robster’s post about librest, and it looked quite neat. I have had a plan for visualising GPX files for quite a while, hopefully with something that allows you to look at data for various parts of a track, like speed and the time you were there, but for various reasons, I haven’t had the time until now.

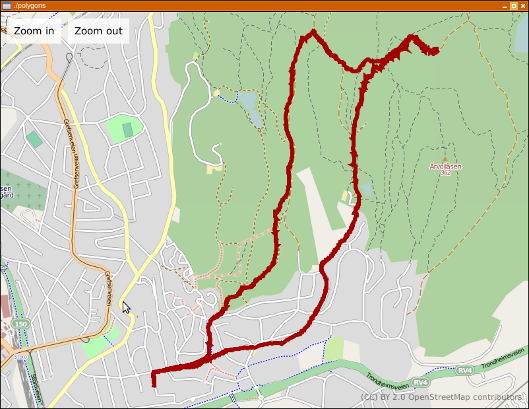

Last night, I took a little time to glue librest and libchamplain together. libchamplain is a small gem of a library, quite nice to work with and with the 0.3.3 release, it got support for rendering polygons. The result is in this screenshot:

This is about 160 lines of C, all included.

2008-12-17 – varnishlog's poor man's filtering language

Currently, varnishlog does not support very advanced filtering. If you run it with -o, you can also do a regular expression match on a given tag, by passing the tag followed by the expression. An example would be

varnishlog -o TxStatus 404

to only show log records where the transmitted status is 404 (not found).

While in Brazil, I needed something a bit more expressive: something that would tell me whether vcl_recv had called pass and the URL ended in .jpg.

varnishlog -o -c | perl -ne 'BEGIN { $/ = ""; } print if (/RxURL.*jpg$/m and /VCL_call.*recv pass/);'

fixed this for me.

2008-12-15 – Ruby/Gems packaging (it's java all over again)

It is sad to see how people complain about how packaging Ruby gems is painful. It seems like it is the Java packaging game all over again, where any application ships its dependencies in a lib/ directory (or, in the case of Rails, vendor/). Mac OS X applications seem to do some of the same thing by shipping lots of libraries in their application bundle, which is really just a directory with some magic files in it.

This is, of course, just like static linking, which we did away with for most software many years ago, mostly due to the pain associated with any kind of security update.

Update: What I find sad is that people keep making the same mistakes we made and corrected years ago, not that people are complaining about those mistakes.

2008-11-28 – !Internet

This is my current internet connectivity. Yay, or something.

2008-11-24 – How to handle reference material in a VCS?

I tend to have a bunch of reference material stored in my home directory: everything from RFCs, which are trivial to get at again using a quick rsync command (but immensely useful when I want to look something up and am not online), to requirements specifications for systems I made years and years ago.

If I didn’t use a VCS, I would just store those in a directory off my home directory, to be perused whenever I felt the need. Now, with a VCS controlling my ~, it feels like I should be able to get rid of them and just look them up again if I ever need them. However, this poses a few problems, such as “how do you keep track of not only what is in a VCS, but also what has ever been there?”. Tools like grep don’t work so well across time; git has a grep command too, but it still doesn’t cut it for non-ASCII data formats.

Does anybody have a good solution to this problem? I can’t be the only one who needs good tools here.

2008-11-16 – network configuration tools, for complex networks

Part of my job nowadays is regular Linux consulting for various clients. As part of this, I end up having to reconfigure my network quite a lot, and often by hand. Two examples:

I am setting up some services on a closed server network, which I am connected to using regular, wired Ethernet. This network does not have access to the internet, so I also have access to a WLAN which does. However, I need access to both internal and external DNS zones, so I need to use two different sets of DNS servers, depending on the domain name. I also currently set up some static routes to get to the internal DNS servers, and have to chattr +i /etc/resolv.conf so that the DHCP client does not overwrite my manually configured DNS.

Another example: I am troubleshooting Varnish deep in a company’s intranet. To get access to it, I first have to use a VPN client, then ssh with an RSA token as well as a password. From there on, I use tsocks to connect to an intermediate host before using tsocks again to get to the actual Varnish boxes.

Currently, I set up all of this infrastructure by hand, which doesn’t really work so well when I switch between clients and go home and use the same laptop there. Do any network configuration tools help with complex setups such as the ones above? I realise they are not exactly run-of-the-mill setups used by most users, but for me they are two fairly common examples of setups I need.